What is Emotion AI?

What Is Emotional Artificial Intelligence?

Have you ever dreamed of a world where machines could understand your emotions and change their behavior to match your feelings? Your virtual assistant would have the power to understand your tone of voice and offer empathetic responses to your interactions. It sounds like the basis of the latest sci-fi blockbuster, but that future is now. It’s known as emotional artificial intelligence, and it’s the newest frontier in machine learning.

Emotional artificial intelligence, also called Emotion AI or affective computing, is being used to develop machines that are capable of reading, interpreting, responding to, and imitating human affect—the way we, as humans, experience and express emotions. What does this mean for consumers? It means that your devices, such as your smartphone or smart speakers, will be able to offer you interaction that feels more natural than ever before, all by simply reading the emotional cues in your voice.

Bringing Emotionally Intelligent Machines to Life

Emotional intelligence is becoming a hot trend for many companies, which are seizing the opportunity to use self-awareness to connect with consumers and talent alike. Expressing, regulating, and understanding emotions is a deeply human experience, and many companies are looking to grow the emotional intelligence of their culture and structure to keep up with the demand for meaningful, purpose-driven engagement.

Similarly, programmers are looking for ways to incorporate emotional intelligence into chatbots, virtual assistants, and other computer systems. Chatbots are one of the fastest-growing marketing trends, with 9 out of 10 consumers saying that they want the ability to message brands. To meet this demand, many companies are turning to automated chatbots driven by AI to help consumers get the information they need.

As our reliance on AI grows, so does the need for emotionally intelligent AI. It’s one thing to ask your virtual assistant to read you today’s game scores, but it’s entirely another thing to entrust your aging parents to the care of an AI-driven bot. Currently, AI may be able to do incredible things, like diagnose medical conditions and outline treatments, but it still needs emotional intelligence to communicate with patients in a more humane way.

Programmers are not only looking for ways to improve our lives through automation, but they’re also looking for ways to help automation connect with how we experience our lives—and emotion AI is how they’re doing just that.

Video: How a medical robot can help an elderly person with his medication while recognising how he feels and predicting his behaviour.

How Does Emotion AI Work?

Affective computing is a rapidly growing field, and companies at the forefront of the field are working to develop artificial intelligence that not only completes tasks but understands the deeper meaning behind communicating with humans.

At Behavioral Signals, for instance, we’re leveraging enormous amounts of data and machine learning to develop behavior- and emotion-savvy engines to build connected, emotionally intelligent AI products upon. Whether creating an interactive smart toy for children, developing truly revolutionary virtual assistant applications for businesses, or programming robots to make caring for loved ones a little easier, our work in emotion AI is intended to supercharge user experience.

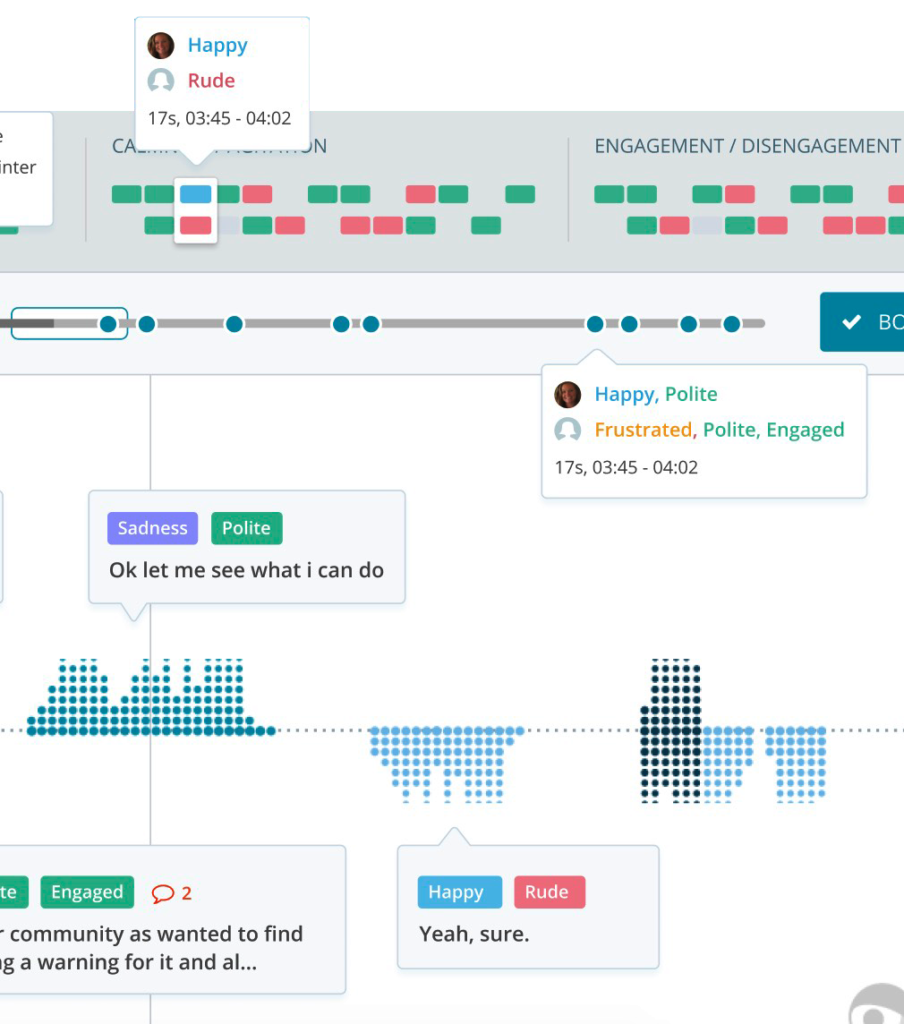

Emotionally intelligent AI relies on user-generated data to catalog and compare reactions to certain stimuli, such as videos or phone conversations, and uses machine learning to transform this data into recognition of human emotion and behavioral patterns. Call centers, in turn, can use this knowledge to understand both agents’ and customers’ needs and provide a better consumer experience. Emotion AI can also provide a more in-depth look into the micro-emotional reactions users have during their experiences, giving researchers valuable feedback.
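To make the idea of turning cataloged reactions into emotion recognition more concrete, here is a minimal sketch in Python. It is not how our engines are built: the three voice features (pitch, energy, speaking rate), their values, and the emotion labels are all invented for illustration, and a simple nearest-centroid classifier stands in for the far larger models used in practice.

```python
from math import dist
from statistics import mean

# Hypothetical labeled reactions: (pitch, energy, speaking rate) -> emotion.
# All numbers are made up for illustration; real systems use far richer features.
TRAINING = [
    ((0.9, 0.8, 0.7), "angry"),
    ((0.8, 0.9, 0.8), "angry"),
    ((0.3, 0.2, 0.3), "sad"),
    ((0.2, 0.3, 0.2), "sad"),
    ((0.6, 0.5, 0.5), "neutral"),
    ((0.5, 0.5, 0.6), "neutral"),
]


def fit_centroids(examples):
    """Average the feature vectors of each emotion label into one centroid."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new feature vector."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = fit_centroids(TRAINING)
print(predict(centroids, (0.85, 0.85, 0.75)))
```

The more labeled reactions such a model sees, the better its picture of what each emotion tends to look like, which is why large amounts of data matter so much in this field.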

Emotion AI, such as that provided by our Oliver API, analyzes the many modalities through which humans express emotion, such as tone of voice, word choice, and engagement, and uses this data to formulate responses and reactions that mimic empathy. Emotionally intelligent AI strives to provide users with an experience that is like interacting with another human.
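One common way to combine several modalities is to score each one separately and then blend the scores, an approach often called late fusion. The sketch below assumes this approach purely for illustration. The modality names follow the paragraph above, but the scores, the weights, and the `fuse` function are hypothetical and do not describe the Oliver API.

```python
def fuse(modality_scores, weights):
    """Blend per-modality emotion scores into one weighted average per emotion."""
    total_weight = sum(weights[name] for name in modality_scores)
    emotions = {e for scores in modality_scores.values() for e in scores}
    return {
        emotion: sum(
            weights[name] * scores.get(emotion, 0.0)
            for name, scores in modality_scores.items()
        ) / total_weight
        for emotion in emotions
    }


# Hypothetical per-modality scores for a single utterance.
scores = {
    "tone_of_voice": {"frustrated": 0.7, "calm": 0.3},
    "word_choice": {"frustrated": 0.2, "calm": 0.8},
    "engagement": {"frustrated": 0.6, "calm": 0.4},
}

# Assumed weights: tone of voice counts most in this example.
weights = {"tone_of_voice": 0.5, "word_choice": 0.3, "engagement": 0.2}

fused = fuse(scores, weights)
print(max(fused, key=fused.get))
```

In this example the words alone sound calm, but the tone of voice tips the blended result toward frustration, which illustrates why how something is said can matter more than what is said.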

We’ve spent years researching and gathering data on the way humans communicate emotion to pioneer the field of Behavioral Emotional Processing and look beyond what a person merely says. We’re working on analyzing what they said, and more importantly, how they said it. We’ve applied this research to AI development to create an API that can understand behavioral signals and interpret these signals to provide insight into the experience of the user—all based on voice.

Image above: meilo application, capturing and measuring emotions from speech

Our technology (available via the Oliver API or as a product for call centers via meilo) can analyze the behavioral signals found in the voices of users and produce easy-to-read reports that help you and your team understand much more about different KPIs, such as call success, customer satisfaction, propensity for a specific action, and agent success.

Discover Emotion AI for Yourself

Affective computing has a nearly endless range of applications available, and our team at Behavioral Signals is working hard to lay the foundation of emotion AI. If you want to know more, we recommend that you start with our detailed report on how user behavioral signals are translated into emotionally intelligent AI.

Still have questions? Get in touch with our team online.