Voice Deepfakes: The Next Frontier in Cybersecurity

Image by jacqueline macou from Pixabay

Amid the fervor surrounding new applications for artificial intelligence, it is easy to overlook the very real implications of what the technology can do. What was once science fiction is quickly becoming reality, and the cybersecurity implications are vast: given sufficient data to draw from, the technology can imitate anyone’s face and, now, anyone’s voice.

Deepfakes represent a rapid acceleration of a century-old concern: whether we can truly believe what we see and hear when we are not there to see and hear it. Photo manipulation, fake audio recordings, and the risk of false video have been around since those technologies first arrived. Only now, however, has AI developed to the point that it can construct fake images and voices of real people from the right reference material. Even more concerning, these deepfakes are becoming more difficult to spot.

From a wave of deepfake videos featuring MrBeast, Tom Hanks, Oprah, and Elon Musk last fall to the recent surge in deepfake pornography targeting famous people like Taylor Swift, it’s becoming increasingly possible to make fake videos of real people with AI. While these videos are not perfect, and they can be quickly debunked by the people featured in them, the prevalence of the technology and the use of voice deepfakes to streamline the process are making it more difficult to keep up.

The Complicated Nature of Voice Deepfakes

Dozens of deepfake tools are freely available on GitHub, and the tools are spreading rapidly. Low-cost resources are available to commission deepfake videos, and voice cloning can be set up in minutes. Moreover, many companies lack the technology to detect fake videos and audio clips, a shortcoming that is coming into sharp focus as we enter an election year.

In one of the most prominent examples of voice deepfakes so far, a series of robocalls imitating the voice of President Joe Biden was made in New Hampshire. The calls instructed voters not to cast their ballots, directly interfering with the presidential primary.

While many headlines focus on video deepfakes, which look and feel more insidious, voice deepfakes are easier to produce, require less data input, and can be filtered to be intrinsically more disruptive. Bots and support services offering voice deepfakes start at just $10 per month and have reached a level of sophistication that is directly impacting consumers, voters, and businesses.

While the highest-quality voice cloning is not an overnight process, requiring extensive training and calibration to match an existing voice, many tools are streamlining the process, and in some applications, a “close enough” clone is all it takes to fool victims. As the computational power needed to clone a voice is reduced and the algorithms become more sophisticated, it becomes easier to clone voices at higher fidelity in less time and with less data.

The Security Implications of Deepfakes

Voice deepfakes pose a number of security challenges, first and foremost among them identity theft. While many institutional systems use personal identifying information to gate access to things like financial accounts, thieves have long looked for ways around such blockades. Hacking a password isn’t necessary if you can get the account owner to give away things like answers to security questions freely. Social engineering and phishing campaigns account for 22% of data breaches, and 91% of all cyberattacks start with a phishing email. A recent NYT profile demonstrated how scammers are using voice deepfakes to attempt to scam banks, specifically credit card call centers. The author cites the infamous 60 Minutes episode in 2023 in which a consultant was able to clone the anchor’s voice and, in just a few minutes, get an employee to hand over the anchor’s passport number.

The high success rate of social engineering attacks has led many companies to offer training on how to handle emails requesting personal information. But it becomes more difficult when those emails turn into phone calls or voicemails using the voice of someone familiar—a boss, coworker, or family member who forgot their password and just needs to get in real quick.

Beyond identity theft, there is the risk that comes from false advertising. Deepfakes are already being used to emulate celebrities like Elon Musk, Joe Rogan, Oprah Winfrey, and many others. While the FTC has clear guidelines about the legality of false endorsements and recently announced new AI impersonation guidelines, scammers are accelerating their use of deepfake technology. Hearing a famous voice on a podcast, in a robocall, or in the background of a video can go a long way toward building trust in what might otherwise be recognized as a scam. One example is the growing number of deepfake background voices being used on YouTube to add credibility to questionable products.

Finally, there is the socio-political impact of voice deepfakes. More than a simple security risk, these deepfakes threaten democratic processes and the integrity of elections. From Ron DeSantis’ campaign using deepfake images of Donald Trump last summer to the AI voice clone of Joe Biden used in New Hampshire in January, Pandora’s box is open. As a result, the FEC is considering policies to limit or explicitly block the use of generative AI, such as deepfakes, in political ads. Additionally, 32 states have put forth bills to regulate deepfakes in politics, and Meta and Google have both updated their AI deepfake policies for political ads. None of this will stop bad actors from using the technology, however, as long as it remains difficult to detect.

Detecting and Responding to Voice Deepfakes

In the United States, there are no federal laws regulating the use of deepfake technology. Despite proposed bills such as the No Artificial Intelligence Fake Replicas And Unauthorized Duplications (No AI FRAUD) Act, which would make it illegal to create digital depictions of a person (alive or dead) without consent, there has been little movement on these issues. Eleven states have passed some form of legislation related to deepfakes, with California banning deepfakes during election season and allowing victims of deepfakes to sue.

While most of the world is still just responding to the impact of deepfake technology, the EU recently passed the AI Act, which establishes several requirements for the use of the technology. More legislation is likely to follow, but much of it focuses on the impact of deepfakes on their victims. This is because it is inherently difficult to detect and mitigate deepfakes proactively. The law essentially accepts that deepfake abuse will occur and looks for ways to protect those who are affected.

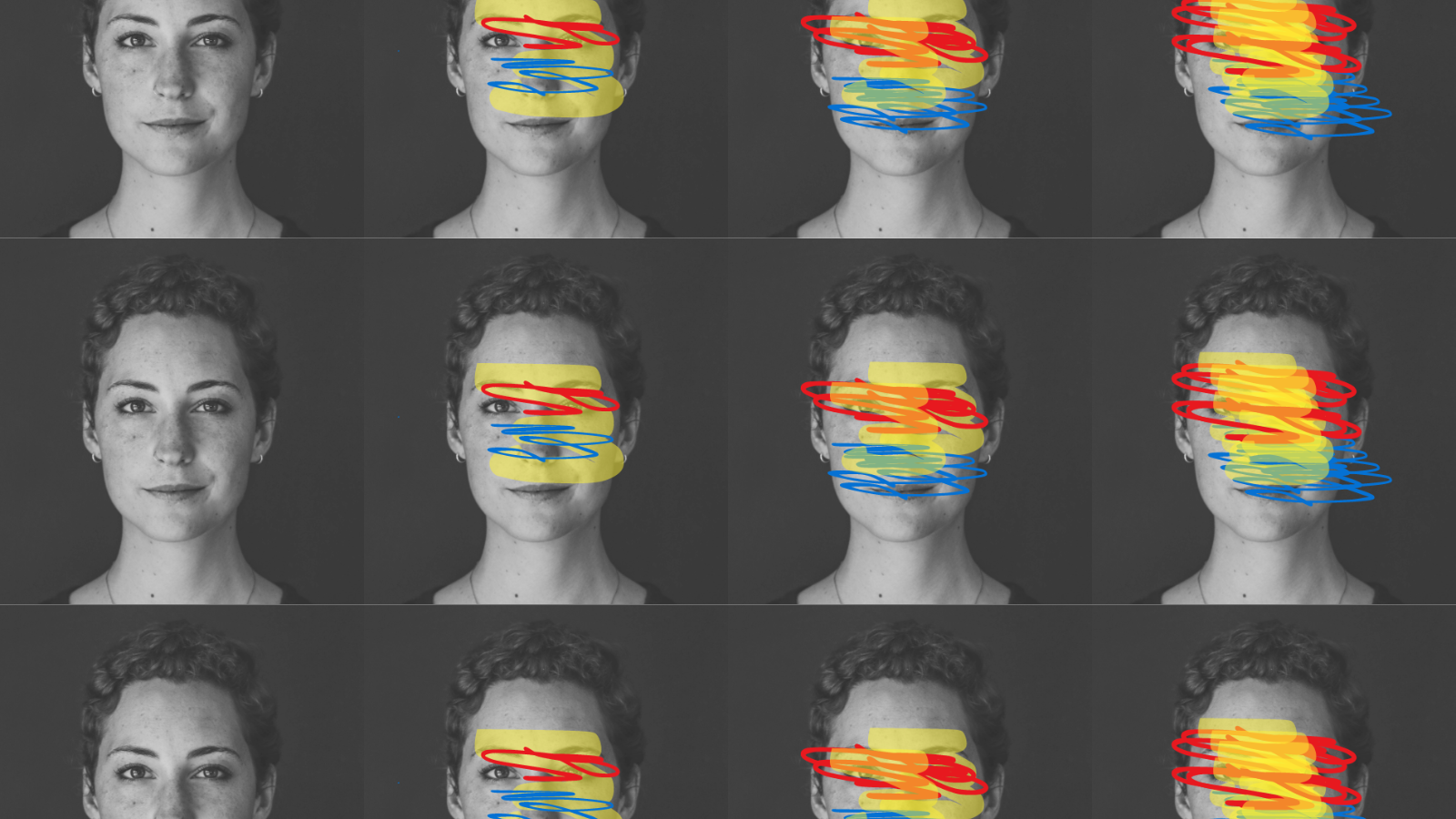

To truly respond to deepfakes, we need AI technology that proactively tracks, detects, and mitigates the threat. Behavioral Signals has developed patent-protected technology to analyze key signatures in the human voice that allow for deepfake voice detection. The advanced AI detection technology built into Behavioral Signals supports more robust voice authentication, detecting variances from natural speaking voices and layers that might indicate generative AI use. Systems like this will become integral in ensuring greater security and protecting against voice-based fraud at scale.

Preparing for What Comes Next

Voice deepfake technology will only become more advanced, less expensive, and easier to use. Legislation to protect its victims is crucial, but so too is the technology needed to ensure the tools can be used safely and effectively. As with the advent of the commercial Internet, safeguards and protections are needed to maximize the benefits for users, reduce the risk of fraud and direct harm, and allow for a more robust use of developing technologies in ways that will benefit society.